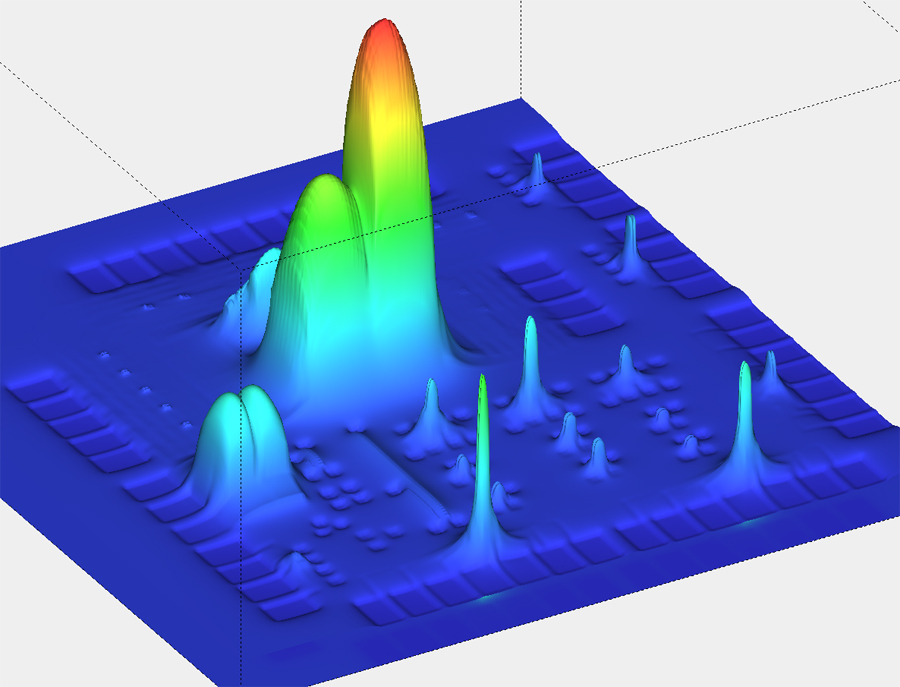

While at Intel I designed DRAMs, and some researchers discovered that DRAMs were failing out in the field. The failures were caused by alpha particles from the ceramic packages. The particles would strike a memory cell and change its stored charge between refresh cycles, causing a one-time error. The solution was literally to coat the die with a material that would slow or block the alpha particles, and to source purer ceramic packages with less contamination.

Reading about the unintended acceleration issues faced by some Toyota owners got me thinking about a possible cause: Single Event Upset (SEU). We know that SEUs become more likely as node geometries shrink, because each circuit node stores less charge and becomes more susceptible to a particle strike. This could explain why the acceleration issue happens only once in a while and is so elusive to reproduce.

Maybe the EDA and TCAD companies should seize the opportunity to promote their SEU simulation tools more widely.

I did a quick Google search for the keywords "SEU Toyota"

and found the following discussion in a forum on IEEE Spectrum:

Sung Chung 02.04.2010

I agree with William Price about Toyota’s SUA (sudden unintended acceleration) problems. I’ve been mitigating ground-level, radiation-related SEU (Single Event Upset) issues for many years. Drive-by-wire control systems for cars have been well researched and SEU tested, and SEU mitigation research results are starting to emerge. Safety-related functions such as drive-by-wire throttle, brakes, and stability control are all part of SEU studies as vehicle electronics become more complex. For engine control unit (ECU) processors manufactured at 65nm or below, the SER (soft error rate) of logic primitives (flip-flops and basic gates) starts to exceed that of SRAM memory cells at the same technology node. Without ECC (Error Correction Code) or other proper mitigation techniques, if the ECU logic or the memory containing the execution code is hit by an SEU, the vehicle will exhibit unintended operation.

How do we know whether Toyota’s problems are SEU-related rather than something the current fixes address? Good question. It’s relatively easy to prove in principle, but it will be an intense and complex effort: perform an accelerated neutron test on the key components, then do a statistical analysis using data collected during the radiation test together with the acceleration factor, failure rate, number of cars on the road, ground-level neutron fluence, geomagnetic rigidity, etc. The test data will give a statistical SUA failure rate for different altitudes and geographic locations.

The reason there is so much speculation about SUA is that it is CND (cannot duplicate) by nature. The failure is a “soft error” rather than a “hard error,” and SUA failure mechanisms do seem to be “soft errors,” so looking for hard evidence in order to fix “soft errors” is the wrong approach. If the design did not incorporate SEU-tolerant architectures, including microcode, it is worth pursuing SEU testing, since many lives are on the line and the current solutions may not address the root cause of the problem.
Contact me for further discussion if interested at schung@eigenix.com.
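Sung mentions ECC as a mitigation for bit flips. To make the idea concrete, here is a minimal Python sketch of the textbook Hamming(7,4) code, which stores 4 data bits in 7 and can locate and correct any single flipped bit. It is only an illustration of the principle, assuming at most one upset per word. It is not a claim about what ECC scheme, if any, is used in Toyota’s ECU, and the function names `encode`/`decode` are hypothetical.

```python
from typing import List, Tuple


def encode(data: List[int]) -> List[int]:
    """Encode 4 data bits [d1, d2, d3, d4] as a 7-bit Hamming(7,4) codeword.

    Codeword positions 1..7 hold [p1, p2, d1, p3, d2, d3, d4]; each parity
    bit covers the positions whose index has the corresponding bit set.
    """
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4  # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def decode(word: List[int]) -> Tuple[List[int], int]:
    """Return (corrected data bits, 1-based position of the fixed bit or 0)."""
    c = list(word)  # copy so the caller's stored word is left untouched
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    syndrome = s1 | (s2 << 1) | (s3 << 2)  # equals the position of the flipped bit
    if syndrome:
        c[syndrome - 1] ^= 1  # flip it back
    return [c[2], c[4], c[5], c[6]], syndrome


if __name__ == "__main__":
    stored = encode([1, 0, 1, 1])
    stored[5] ^= 1  # simulate a single-event upset flipping position 6
    print(decode(stored))  # → ([1, 0, 1, 1], 6)
```

A single-error-correcting code like this one will miscorrect if an upset flips two bits in the same word. That is why real memories often add an extra overall parity bit (SECDED) so that double errors are at least detected.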

William Price, Life Member IEEE 02.02.2010

I am a physicist, retired after 20 years working at the Jet Propulsion Laboratory, and at other research labs before that. I have been following the Los Angeles Times articles about the sudden acceleration problems in Toyota and other vehicles. As a recent article stated, a number of people think the problem is in the electronics. I do too. However, NHTSA officials have ‘found no evidence that any electronics defect exists in the company’s electronic throttle system.’ The problem, I believe, is that they are not looking for the right cause. I believe the cause may be a phenomenon known in the aerospace world as ‘Single Event Upset’ (SEU). The effect occurs when a single ionizing particle (cosmic ray) strikes a sensitive part of an integrated circuit, causing the circuit to trigger some key unintended function. This effect is well known in the aerospace world and has been studied for about twenty-five years. High-speed memory circuits are particularly sensitive: in such circuits an impinging ionizing particle can cause a 1 to become a 0 or vice versa. Other electronic device types are also sensitive to SEU. It has already been proved experimentally that such events can occur at the earth’s surface. Solutions to aerospace SEU problems have been studied for years, and several workarounds exist. I would like the opportunity to talk to someone at Toyota about this problem; specifically, I need to talk to someone in engineering who is in charge of the electronics controlling the throttle. I can arrange for testing of their circuits using ionizing particles generated in particle accelerators. I can also arrange for experts on radiation environments to assess the probability of upset once the cross section for the effect has been experimentally determined. This testing would have to be done with the circuit engineer in charge operating the electronics while the circuit is bathed in the particle field.
My experience in radiation effects on materials, electronic parts and electronic systems goes back 45 years. I am one of the pioneers in the field. I was a leader of one of the two teams that originally proved that Single Event Upset does occur due to charged particles in Integrated Circuit Memory Devices used on spacecraft. I would appreciate any help you could give in making the right contacts to pursue this possible solution to the problem. William E. Price (Bill) Cell Phone: 760-666-0151 Email: wprice82@gmail.com
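Both Sung and Bill describe the same basic arithmetic: measure an upset cross section under a particle beam, then multiply it by the natural particle flux to estimate how often upsets happen in the field. Here is a back-of-envelope Python sketch, assuming the rate scales linearly with cross section, flux, and number of sensitive bits. Every number in the example (cross section, bit count, fleet size, operating hours, location factor) is a hypothetical placeholder, not measured data, and the function names are made up for illustration.

```python
"""Back-of-envelope field soft-error estimate from a beam-measured cross section.

A sketch only: every number in the example is a hypothetical placeholder.
"""

HOURS_PER_BILLION = 1e9  # 1 FIT = 1 failure per 10^9 device-hours


def upsets_per_hour(cross_section_cm2_per_bit: float,
                    flux_per_cm2_hour: float,
                    n_bits: int,
                    location_factor: float = 1.0) -> float:
    """Expected upsets per device-hour: sigma x flux x bits x site scaling.

    location_factor scales the reference flux for altitude/latitude
    (1.0 = the reference site); its value is an assumption you must supply.
    """
    return cross_section_cm2_per_bit * flux_per_cm2_hour * n_bits * location_factor


def to_fit(rate_per_hour: float) -> float:
    """Convert a per-device-hour rate to FIT."""
    return rate_per_hour * HOURS_PER_BILLION


def fleet_upsets_per_year(rate_per_hour: float,
                          n_vehicles: int,
                          operating_hours_per_year: float) -> float:
    """Expected upsets across a fleet, counting only hours the ECU is powered."""
    return rate_per_hour * n_vehicles * operating_hours_per_year


if __name__ == "__main__":
    rate = upsets_per_hour(
        cross_section_cm2_per_bit=1e-14,  # hypothetical beam result
        flux_per_cm2_hour=13.0,           # ballpark sea-level neutron flux (>10 MeV)
        n_bits=8 * 2**20,                 # hypothetical 8 Mbit of sensitive state
        location_factor=1.0,
    )
    print(f"{to_fit(rate):.0f} FIT per ECU")
    print(f"{fleet_upsets_per_year(rate, 1_000_000, 400.0):.0f} upsets/year "
          "across a hypothetical 1M-vehicle fleet")
```

An upset is not the same as a visible failure. Many flipped bits are overwritten or never read, so a real analysis would also need a derating factor for how often an upset actually propagates to the throttle output.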

FYI: we’ve started a discussion on this topic over on Plaxo: http://www.plaxo.com/groups/profile/eda?n=1

I think we need to be a bit more parsimonious here. What’s more likely: a random bit flip from a cosmic energy source, or a human-made error in the tens of millions of lines of embedded code found in today’s new cars?

Glenn,

You’re right: with 10 million lines of code, a coding error is the more likely source of a design flaw.

The odd thing that gets to me is that this acceleration issue is so difficult to reproduce. If it were software-induced, I would expect it to be easier to reproduce.

Regardless…

Failures of the complex electronic and information systems that are now ubiquitous in our products and society can affect us dramatically. From autos to pacemakers to advanced weaponry, all of the system dynamics need to be probed.

We need to advance verification to address all known probable sources of failure, because, clearly, the cost of failure is enormous.

It would surprise many buyers to learn that more and more complex systems are going to market without any ability to test even half of their potential dynamics.

The Toyota problem is a portent of future problems unless the industry improves its ability to verify across wider coverage.

Bob,

Thanks for sharing. Our consumer mindset is simply to buy the next model of electronic gizmo when the current model starts acting flaky.

At least the auto industry has some semblance of testing and defined recall procedures to protect consumers.

Now NASA is going to examine possible electromagnetic causes of unintended acceleration in Toyota vehicles:

http://abcnews.go.com/Blotter/nasa-scientists-test-toyota-electronics/story?id=10241757

A Toyota brake story in which a convicted driver has now been set free: http://www.cnn.com/2010/CRIME/08/09/toyota.prison.release/index.html?hpt=T2

All the comments I have read lack a global solution. The only way to really understand the source of the problem is:

- First, measure the system behavior in the real environment.

- Second, test the component technology (memory planes) to quantify the neutron contribution (e.g., altitude tests at 2552 m, with an acceleration factor of 6.2).

- Then, measure the alpha contribution by testing in a cave under 1.7 km of rock (which removes the neutrons).

We did this and clearly separated the neutron contribution from the alpha contribution of the technology.

The answer is “crystal clear”: below 1000 m altitude, where most cars operate, alpha particles are responsible for most of the soft error contribution.

I am prepared to host TOYOTA specialists to show them the facilities and results that will likely give them “the root of all their evils.”

J. Borel, IEEE Millennium Medal, retired executive VP of STMicroelectronics Central R&D,

presently “JB R&D Consulting”.


A complete article on the topic will be sent to automotive manufacturers on request.

J. Borel
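Joseph’s procedure boils down to two equations. The underground rate is alpha-only, and the altitude rate is the alpha rate plus the sea-level neutron rate scaled by the acceleration factor (6.2 at 2552 m in his example). Here is a minimal Python sketch under exactly those assumptions. The name `split_ser` is hypothetical, and the 300/920 rates in the example are invented for illustration. They are not his measurements.

```python
from dataclasses import dataclass


@dataclass
class SerSplit:
    alpha: float              # alpha-induced rate, assumed the same at every site
    neutron_sea_level: float  # neutron-induced rate at sea level

    @property
    def alpha_fraction(self) -> float:
        """Share of the sea-level soft error rate attributed to alphas."""
        return self.alpha / (self.alpha + self.neutron_sea_level)


def split_ser(rate_underground: float, rate_altitude: float,
              altitude_factor: float) -> SerSplit:
    """Separate alpha and neutron contributions from two site measurements.

    Assumes: deep underground the neutron flux is negligible, so the measured
    rate is alpha-only; at altitude the rate is
    alpha + altitude_factor * neutron_sea_level.
    """
    alpha = rate_underground
    neutron_sea_level = (rate_altitude - alpha) / altitude_factor
    if neutron_sea_level < 0:
        raise ValueError("altitude rate is below the underground rate")
    return SerSplit(alpha, neutron_sea_level)


if __name__ == "__main__":
    # Hypothetical rates (e.g. in FIT), not measured data; 6.2 is the
    # 2552 m acceleration factor quoted in Joseph's comment.
    s = split_ser(rate_underground=300.0, rate_altitude=920.0, altitude_factor=6.2)
    print(f"alpha {s.alpha:.1f}, neutron {s.neutron_sea_level:.1f}, "
          f"alpha share {s.alpha_fraction:.2f}")
    # → alpha 300.0, neutron 100.0, alpha share 0.75
```

With real test data the event counts are often small, so each measured rate should carry a Poisson confidence interval. The split is only as sharp as those error bars allow.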

Joseph,

Thank you for the info on measuring alpha particles in ICs. I’d love to hear of a conclusive test being conducted to determine the source of the spurious behavior in Toyota and other vehicles.

I think most engineers would agree that simulation-based verification is key to reliable and robust advanced electronic systems.

The dynamics are simply too complex, and there are too many degrees of freedom for failure, to rely on bench testing or, as in the auto industry, crash testing alone.

Moreover, any process or event that has a significant impact on yield or reliability can be modeled and simulated.

Verification is required across the widest possible range of significant process and environmental variations. But full coverage may involve hundreds of millions or even billions of vectors, and that number keeps growing.

Of course Intel is on top of this, but what about everyone else? It is possible, and necessary, for companies with fewer resources than Intel too, if they want to benefit from sub-45nm process nodes.

But it is always important to be aware of, and to revisit, the fundamental physics of the processes in play. There is no problem with alpha particles, or with any event or variation, as long as the designer knows its impact and severity and has tools he or she trusts to deal with it.

Unfortunately, and too often, fundamentals and accuracy are tossed out to gain capacity and speed: the Faustian bargain of EDA. In that case the simulator itself becomes a source of variation and a yield issue, just as much as a thumbprint in the middle of a wafer.

Most EDA tool users would (and will) realize that a multitude of physics and EE fundamentals are thrown out the window in the latest-and-greatest, new-and-improved EDA toolsets. It’s funny to see that some EDA vendors are not even self-consistent across their own product lines, much less stable and accurate. Do they not know what accuracy is?

However, verification based on fundamental physics is indisputable, necessary, and indeed possible, and EDA users need to demand more from their suppliers.

Bob,

I appreciate your insights. When I designed chips at Intel my manager would ask me, “So, will this chip work?”

My reply was, “Yes, however only for the vectors that we simulated. Outside of that, all bets are off.”

Obviously, for Toyota and other auto manufacturers there is not yet a way to simulate an entire automobile, including its electronics, hydraulics, and mechanical systems. Capturing all the physical interactions in such a complex system forces the engineering team to make simplifying assumptions, so the resulting model can be exhaustive but not accurate.

More info on bugs in how the Toyota black box collects data: http://articles.latimes.com/2010/sep/15/business/la-fi-0915-toyota-blackbox-20100915