Amr Bayoumi, Ph.D. (Chief Technical Adviser) from New Systems Research spoke with me via Skype on Friday. We had a meeting scheduled at DAC, but I got the meeting location wrong and missed it.

The last company to use a GPU to accelerate SPICE was Nascentric, and now they're out of business. They reported only a 4X speedup from the GPU.

Co-founder Yasser Hanafy earned his PhD at Duke and worked on parallel SPICE (4 CPUs).

The SPICE algorithm spends most of its time doing matrix operations, so how do you make it parallel?

They started with three alpha customers and tuned the software last year. Now they are in the beta phase.

Competitors Magma and Cadence have figured out how to use multi-core CPUs to speed up SPICE simulation.

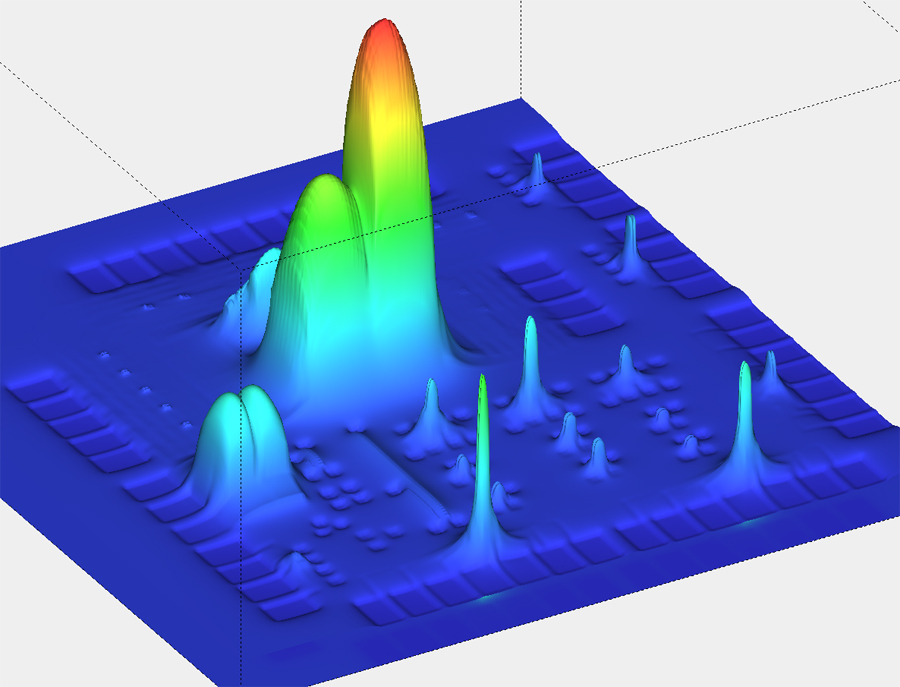

Speedups seen so far cover device modeling and matrix solving. Device modeling is 70X to 100X faster (GPU plus multi-core), and matrix solve times are 20X to 30X faster with a GPU than with a single core.
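To make the parallelism question concrete, here is a deliberately tiny sketch of one SPICE-style Newton solve. It is not NSR's code: it assumes a made-up ladder circuit (a current source into node 0, a resistor between neighboring nodes, and a diode from every node to ground) with hypothetical diode parameters `IS` and `VT`. It separates the two phases the speedups above refer to: device evaluation, where each device depends only on its own voltage and can be handed to parallel workers, and the matrix solve, which couples every node. The dense Gaussian elimination and the fixed 0.1 V step clamp are simplifications (real simulators use sparse LU and junction-specific voltage limiting), and in CPython the thread pool only illustrates the independence of the device phase; it does not make the arithmetic faster.

```python
import math
from concurrent.futures import ThreadPoolExecutor

# Hypothetical diode parameters, for illustration only.
IS = 1e-14  # saturation current (A)
VT = 0.025  # thermal voltage (V), approximate room-temperature value


def eval_diode(v):
    """Device phase: diode current and conductance at junction voltage v.

    Each call depends only on its own voltage, so every device in the
    netlist can be evaluated independently (and therefore in parallel).
    """
    e = math.exp(v / VT)
    return IS * (e - 1.0), IS / VT * e


def solve_dense(a, b):
    """Matrix phase: Gaussian elimination with partial pivoting."""
    n = len(b)
    a = [row[:] for row in a]
    b = b[:]
    for k in range(n):
        p = max(range(k, n), key=lambda row: abs(a[row][k]))
        a[k], a[p] = a[p], a[k]
        b[k], b[p] = b[p], b[k]
        for row in range(k + 1, n):
            f = a[row][k] / a[k][k]
            for c in range(k, n):
                a[row][c] -= f * a[k][c]
            b[row] -= f * b[k]
    x = [0.0] * n
    for k in range(n - 1, -1, -1):
        s = sum(a[k][c] * x[c] for c in range(k + 1, n))
        x[k] = (b[k] - s) / a[k][k]
    return x


def newton_dc(n, r, i_src, max_step=0.1, tol=1e-9, max_iter=200):
    """DC operating point of the hypothetical diode ladder via Newton-Raphson."""
    v = [0.0] * n
    g_r = 1.0 / r
    with ThreadPoolExecutor() as pool:
        for it in range(max_iter):
            # Phase 1: evaluate every device model independently.
            devices = list(pool.map(eval_diode, v))
            # Phase 2: stamp the linearized circuit into the nodal matrix ...
            a = [[0.0] * n for _ in range(n)]
            rhs = [0.0] * n
            rhs[0] = i_src
            for k in range(n - 1):  # resistor between node k and node k+1
                a[k][k] += g_r
                a[k + 1][k + 1] += g_r
                a[k][k + 1] -= g_r
                a[k + 1][k] -= g_r
            for k, (i_d, g_d) in enumerate(devices):  # diode companion model
                a[k][k] += g_d
                rhs[k] -= i_d - g_d * v[k]
            # ... then solve the coupled system and limit the voltage step.
            v_new = solve_dense(a, rhs)
            dv = [max(-max_step, min(max_step, vn - vo)) for vn, vo in zip(v_new, v)]
            v = [vo + d for vo, d in zip(v, dv)]
            if max(abs(d) for d in dv) < tol:
                return v, it + 1
    raise RuntimeError("Newton iteration did not converge")


def kcl_residual(v, r, i_src):
    """Largest current imbalance (A) at any node of the ladder."""
    worst = 0.0
    for k in range(len(v)):
        leaving = eval_diode(v[k])[0]
        if k > 0:
            leaving += (v[k] - v[k - 1]) / r
        if k < len(v) - 1:
            leaving += (v[k] - v[k + 1]) / r
        injected = i_src if k == 0 else 0.0
        worst = max(worst, abs(leaving - injected))
    return worst
```

For example, `v, iterations = newton_dc(3, 1000.0, 1e-3)` finds the node voltages of a three-node ladder driven by 1 mA, and `kcl_residual(v, 1000.0, 1e-3)` confirms that Kirchhoff's current law holds at every node. In a post-layout netlist the device loop is wide and independent, while the matrix solve is where the coupling (and the harder parallelization problem) lives.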

Competitive advantages include: the technology works as an engine, so it can be reused with any SPICE circuit simulator; the company has low overhead and is based in Cairo, Egypt; they have research grants; and ATI works closely with them (the two co-wrote an IEEE article in December).

What is the SPICE simulation capacity?

A: It can simulate 1M elements.

What is your business model?

A: We want to sell this IP to Cadence, Mentor, Magma, etc.

How many cores can I use with your SPICE tool?

A: 8 processors maximum.

What designs work best with your SPICE?

A: Post-layout netlists with a large number of RC elements.

What simulation models do you support?

A: BSIM3 and BSIM4 models.

Do you exploit netlist hierarchy?

A: Flat simulation, not hierarchical.

When do you expect to be at production release?

A: Production by March 2010.

Summary

This GPU acceleration of SPICE looks very promising, so I wonder how long it will be before one of the bigger EDA companies buys this technology.

One comment I heard in a tutorial at DAC is that GPUs do not support full IEEE floating point. For graphics applications a small inaccuracy may be OK and not visible in the final rendered picture(s). For a SPICE-like simulation, this inaccuracy may be problematic. The situation may become worse with the introduction of OpenCL, where all compute resources could be allocated (in Apple's Snow Leopard they will be, with something called Grand Central). Ultimately the GPU vendors will have to support IEEE floating point; until then, I wouldn't consider running SPICE on a GPU.

David,

Great point. I never thought to question the accuracy of the results. I'll ask ACCIT to post a follow-up reply on the accuracy issue.

Dear David,

This is Amr with ACCIT-NSR. This is the most popular question. In fact, the reason we chose to work with AMD's ATI Stream technology on this project was that it supported 64-bit double-precision arithmetic in hardware (when this project started in Q1 2008). Please check:

http://ati.amd.com/technology/streamcomputing/product_firestream_9270.html

Now let us talk about error accumulation. The errors come from the large number of DIV/MUL/ADD/SUB operations performed when you solve sparse matrices, especially matrices with millions of non-zero elements. In these cases, at each time point, the last element in the matrix could accumulate the errors of hundreds of thousands of DIV/MUL operations. As I mentioned before, this arithmetic is fully implemented in hardware on the ATI GPU with IEEE 64-bit precision.
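A minimal sketch of why the precision of each operation matters when errors accumulate. It is not ACCIT-NSR's code and not a sparse solve: it simply repeats one addition many times, once in native double precision and once in emulated IEEE single precision. The emulation, an assumption of this sketch, rounds every intermediate result to float32 using the `struct` module's `"f"` format; because a double has more than twice the precision of a single, rounding an exact-in-double sum this way reproduces a true float32 addition.

```python
import struct


def to_f32(x):
    """Round a Python float (IEEE 754 double) to the nearest IEEE 754 single."""
    return struct.unpack("f", struct.pack("f", x))[0]


def accumulate(n, step=0.1, single=False):
    """Add `step` to a running total n times.

    With single=True every intermediate result is rounded to float32, so the
    rounding error of each addition feeds into the next one, much like the
    long chains of operations behind the last pivot of a large LU solve.
    """
    s = to_f32(step) if single else step
    total = 0.0
    for _ in range(n):
        total = to_f32(total + s) if single else total + s
    return total


def relative_error(n, single):
    exact = n / 10  # the mathematically exact sum of n copies of 0.1
    return abs(accumulate(n, single=single) - exact) / exact
```

With `n = 100_000`, `relative_error(n, single=True)` comes out several orders of magnitude larger than `relative_error(n, single=False)`. The same effect, spread over hundreds of thousands of DIV/MUL operations per time point, is why 64-bit hardware arithmetic matters for the matrix solve.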

The second source is integration error (called truncation error), and again this is basic arithmetic implemented in 64-bit IEEE double precision on our GPU.
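For readers unfamiliar with the term, here is a rough sketch of truncation error, assuming a single RC node discharging from 1 V (dv/dt = -v/tau) and a fixed time step; real SPICE engines use adaptive step control, and nothing here is taken from ACCIT-NSR's implementation. It compares backward Euler with the trapezoidal rule (SPICE's default integration method) against the exact exponential. The arithmetic is ordinary 64-bit double precision; the error that remains comes from the integration formula and the step size `h`.

```python
import math


def rc_discharge(method, h, t_end=1.0, tau=1.0, v0=1.0):
    """Integrate dv/dt = -v/tau from v(0) = v0 to t_end with fixed step h."""
    v = v0
    for _ in range(round(t_end / h)):
        if method == "be":
            # Backward Euler: v[n+1] * (1 + h/tau) = v[n]
            v = v / (1.0 + h / tau)
        elif method == "trap":
            # Trapezoidal: v[n+1] * (1 + h/(2 tau)) = v[n] * (1 - h/(2 tau))
            v = v * (1.0 - h / (2.0 * tau)) / (1.0 + h / (2.0 * tau))
        else:
            raise ValueError(f"unknown method: {method}")
    return v


def global_error(method, h):
    """Absolute error at t = 1 against the exact solution exp(-1)."""
    return abs(rc_discharge(method, h) - math.exp(-1.0))
```

Halving `h` roughly halves the backward Euler error (first order) and roughly quarters the trapezoidal error (second order), which is why simulators control truncation error through the time step rather than through arithmetic precision alone.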

Now, for device models such as BSIM4, among several thousand lines of C/C++ computational code you will occasionally find a sqrt() or exp(), and on some GPU generations these are computed in 32-bit IEEE single-precision floating point and then cast to 64-bit (this may be solved in current generations). The good news is that the device model is recalculated at every time point, so this extremely small error does not persist. Also, in a post-layout circuit, the BSIM4 devices account for a small percentage of the matrix elements compared to the R and C elements.
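A rough sketch of the argument that a single-precision exp() inside the device model does not build up over time. Purely for illustration, it assumes such an exp() takes a float32 argument and returns a float32 result (`exp_single` is a hypothetical stand-in, not a GPU or BSIM4 function), and it uses a diode-like argument v/vt rather than a real BSIM4 expression. Each evaluation starts from a freshly computed double-precision voltage, so its relative error stays within roughly (|x| + 1) × 2^-24 no matter how many time points are simulated.

```python
import math
import struct


def to_f32(x):
    """Round a double to the nearest IEEE 754 single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def exp_single(x):
    """Hypothetical single-precision exp(): float32 in, float32 out, widened to double."""
    return to_f32(math.exp(to_f32(x)))


def worst_relative_error(points=10000, v_max=0.8, vt=0.025):
    """Worst relative error of exp_single over `points` simulated time points.

    The argument at each time point is recomputed from scratch (here, a sweep
    of the voltage from 0 to v_max), so one time point's rounding error is
    never fed into the next; the worst case stays bounded as points grows.
    """
    worst = 0.0
    for k in range(points):
        x = (k / points) * v_max / vt  # x stays below 32 for the defaults
        ref = math.exp(x)
        worst = max(worst, abs(exp_single(x) - ref) / ref)
    return worst
```

Raising `points` (more time points) does not raise the worst-case error above the per-evaluation bound, whereas an error that was carried from one time point to the next, as in the repeated-sum case, would keep growing.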

Regards

Amr